Wed, 25 May, 01:50 - 03:00 UTC

Deep Neural Networks: A Nonparametric Bayesian View with Local Competition

Chair: Athina Petropulu, Rutgers, The State University of New Jersey, USA

Norbert Wiener Award Recipient

Abstract

In this talk, a fully probabilistic approach to the design and training of deep neural networks will be presented. The framework is that of nonparametric Bayesian learning. Both fully connected and convolutional networks (CNNs) will be discussed. The structure of the networks is not chosen a priori. By adopting nonparametric priors over infinite binary matrices, such as the Indian Buffet Process (IBP), the number of weights, as well as the number of nodes (or, in CNNs, the number of kernels), is estimated via the resulting posterior distributions. Training revolves around variational Bayesian arguments.
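To make the role of an IBP prior more concrete, here is a minimal sketch, assuming the truncated stick-breaking construction of the IBP and NumPy. The function name `ibp_stick_breaking_mask`, the truncation level `max_units`, and the concentration `alpha` are illustrative choices, not taken from the talk. The sketch only draws a binary mask from the prior and applies it to one layer's weight matrix; it does not implement the variational posterior inference the talk refers to.

```python
import numpy as np


def ibp_stick_breaking_mask(n_inputs, max_units, alpha, rng):
    """Sample a binary mask Z (n_inputs x max_units) from a truncated
    stick-breaking IBP prior: nu_j ~ Beta(alpha, 1), pi_k = prod_{j<=k} nu_j,
    z_ik ~ Bernoulli(pi_k). Illustrative sketch only."""
    nu = rng.beta(alpha, 1.0, size=max_units)   # stick-breaking fractions in (0, 1)
    pi = np.cumprod(nu)                         # non-increasing inclusion probabilities
    # Broadcast pi over rows: column k is kept with probability pi_k.
    z = (rng.random((n_inputs, max_units)) < pi).astype(np.int8)
    return z, pi


rng = np.random.default_rng(0)
z, pi = ibp_stick_breaking_mask(n_inputs=784, max_units=50, alpha=5.0, rng=rng)

W = rng.normal(size=(784, 50))   # dense weights of one fully connected layer (hypothetical)
W_eff = W * z                    # weights switched off by the mask are removed
active_units = int(z.any(axis=0).sum())   # nodes with at least one active incoming weight
active_weights = int(z.sum())
print(f"{active_units} of 50 nodes and {active_weights} of {784 * 50} weights retained")
```

Because the sticks are independent, the expected inclusion probability of the k-th column is (alpha / (alpha + 1))^k, so trailing nodes are increasingly likely to be switched off; a larger `alpha` keeps more of them. In a full Bayesian treatment, the mask would be inferred from data (for example, variationally) rather than drawn from the prior as here.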

Besides the probabilistic arguments followed for inferring the involved parameters, the nonlinearities used are neither squashing functions nor rectified linear units (ReLUs), which are typically used in standard networks. Instead, inspired by neuroscientific findings, the nonlinearities comprise groups of probabilistically competing linear neurons, in line with what is known as the local winner-take-all (LWTA) strategy. In each node, only one neuron fires to provide the output. Thus, the neurons in each node are laterally related (within the same layer) and only one “survives”; yet this takes place in a probabilistic context, based on an underlying distribution that relates the neurons of the respective node. This rationale more closely mimics the way neurons in our brain cooperate.
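The stochastic competition within a node can be sketched as follows. This is a minimal NumPy illustration, assuming (as one simple choice) that each neuron's probability of winning is a softmax over the linear responses in its node, sampled with the Gumbel-max trick. The names `stochastic_lwta`, `n_nodes`, and `units_per_node` are hypothetical, and the talk's exact parameterization of the competition may differ.

```python
import numpy as np


def stochastic_lwta(x, W, b, n_nodes, units_per_node, rng):
    """Stochastic local winner-take-all layer (illustrative sketch).

    x: (batch, d_in); W: (d_in, n_nodes * units_per_node); b: (n_nodes * units_per_node,)
    Each node holds `units_per_node` competing linear neurons; one winner per node
    is sampled with probability softmax(linear responses), and only it passes its output.
    """
    batch = x.shape[0]
    # Linear responses of every neuron, grouped by node.
    h = (x @ W + b).reshape(batch, n_nodes, units_per_node)
    # Gumbel-max trick: argmax(h + Gumbel noise) is an exact sample from softmax(h).
    winners = np.argmax(h + rng.gumbel(size=h.shape), axis=-1)
    # One-hot mask that keeps only the winning neuron in each node.
    mask = np.eye(units_per_node, dtype=h.dtype)[winners]
    return (h * mask).reshape(batch, -1), winners


rng = np.random.default_rng(1)
x = rng.normal(size=(4, 20))
W = 0.1 * rng.normal(size=(20, 3 * 2))
b = np.zeros(3 * 2)
y, winners = stochastic_lwta(x, W, b, n_nodes=3, units_per_node=2, rng=rng)
```

Unlike a deterministic max, the neuron with the largest response does not always win; it is only the most likely winner, which is what makes the competition probabilistic.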

Experiments on a number of standard data sets verify that highly efficient (compressed) structures are obtained, in terms of the number of nodes, weights, and kernels as well as bit-precision requirements, with no sacrifice in performance compared to previously published state-of-the-art research. Besides efficient modelling, such networks turn out to exhibit much higher resilience to adversarial attacks, as demonstrated by extensive experiments and substantiated by some theoretical arguments.

The presentation focuses mainly on the concepts and the rationale behind the methodology rather than on the mathematical details.

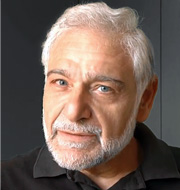

Biography

Sergios Theodoridis is currently Professor Emeritus of Signal Processing and Machine Learning in the Department of Informatics and Telecommunications of the National and Kapodistrian University of Athens, Greece, and a Distinguished Professor at Aalborg University, Denmark. His research lies at the intersection of Signal Processing and Machine Learning. He is the author of the book “Machine Learning: A Bayesian and Optimization Perspective” (Academic Press, 2nd ed., 2020), the co-author of the book “Pattern Recognition” (Academic Press, 4th ed., 2009), and the co-author of the book “Introduction to Pattern Recognition: A MATLAB Approach” (Academic Press, 2010). He is the co-author of seven papers that have received Best Paper Awards, including the 2014 IEEE Signal Processing Magazine Best Paper Award and the 2009 IEEE Computational Intelligence Society Transactions on Neural Networks Outstanding Paper Award. He is the recipient of the 2021 IEEE SP Society Norbert Wiener Award, the 2017 EURASIP Athanasios Papoulis Award, the 2014 IEEE Signal Processing Society Education Award, and the 2014 EURASIP Meritorious Service Award. He has served as Vice President of the IEEE Signal Processing Society and as President of the European Association for Signal Processing (EURASIP).

He is a Fellow of the IET, a Corresponding Fellow of the Royal Society of Edinburgh (RSE), a Fellow of EURASIP, and a Life Fellow of the IEEE.