Acquiring information and knowledge about objects orbiting around earth is known as Space Situational Awareness (SSA). SSA has become an important research topic thanks to multiple large initiatives, e.g., from the European Space Agency (ESA), and from the American National Aeronautics and Space Administration (NASA). Vision-based sensors are a great source of information for SSA, especially useful for spacecraft navigation and rendezvous operations where two satellites are to meet at the same orbit and perform close-proximity operations, e.g., docking, in-space refueling, and satellite servicing. Moreover, vision-based target recognition is an important component of SSA and a crucial step towards reaching autonomy in space. However, although major advances have been made in image-based object recognition in general, very little has been tested or designed for the space environment.

State-of-art object recognition algorithms are deep learning approaches requiring large datasets for training. The lack of sufficient labelled space data has limited the efforts of the research community in developing data-driven space object recognition approaches. Indeed, in contrast to terrestrial applications, the quality of spaceborne imaging is highly dependent on many specific factors such as varying illumination conditions, low signal-to-noise ratio, and high contrast.

We propose the SPAcecraft Recognition leveraging Knowledge of space environment (SPARK) Challenge to design data-driven approaches for space target recognition, including classification and detection. The proposed SPARK challenge launches a new unique space multi-modal annotated image dataset. The SPARK dataset contains a total of ∼150k RGB images and the same number, ∼150k, of depth images of 11 object classes, with 10 spacecrafts and one class of space debris.

The data have been generated under a realistic space simulation environment, with a large diversity in sensing conditions, including extreme and challenging ones for different orbital scenarios, background noise, low signal-to-noise ratio (SNR), and high image contrast that defines actual space imagery.

This challenge offers an opportunity to benchmark existing object classification and recognition algorithms, including multi-modal approaches using both RGB and depth data. It intends to intensify research efforts on automatic space object recognition and aims to spark collaborations between the image/vision sensing community and the space community.

New unique annotated space image dataset:

| Registration Opens | 2021-Feb-05 |

| Registration Closes | 2021-Apr-01 |

| Release of Training Dataset | 2021-Apr-15 |

| Release of Validation Dataset | 2021-Apr-30 |

| Release of Testing Dataset | 2021-Jun-07 |

| Submission of Results | 2021-Jun-20 |

| Announcement of Winners | at ICIP 2021 |

LMO - https://www.lmo.space

Gigapixel videography, beyond the resolution of a single camera and human visual perception, aims to capture large-scale dynamic scenes with extremely high resolution. Benefiting from the high resolution and wide FoV, it leads to new challenges and opportunities for a large amount of computer vision tasks. Among them, object detection is a typical task to locate the target that belongs to the category of interest in images or videos. However, accurate object detection in large-scale scenes is still difficult due to the low image-quality of the instances in the distance. Although the gigapixel videography can capture both the wide-FoV scene and the high-resolution local details, how to deal with these high-resolution data effectively and overcome the huge change of object scale has not been well studied.

The purpose of this challenge at ICIP 2021 aims to encourage and highlight novel strategies with a focus on robustness and accuracy in various scenes, which have a vast variance of the pedestrian pose, scale, occlusion, and trajectory. This is expected to be achieved by applying novel neural network architectures, incorporating prior knowledge insights and constraints. PANDA dataset (website: www.panda-dataset.com) will be used for this challenge, which consists of 555 representative images captured by gigapixel cameras in a variety of places. The ground-truth annotations include 111.8k bounding-boxes with fine-grained attribute labels including person pose and vehicle category.

| Registration Opens | 2021-Apr-15 |

| Training Data Available | 2021-Apr-30 |

| Testing Data Available | 2021-May-30 |

| Results Submission Deadline | 2021-Jun-14 |

| Challenge Results Announcement | 2021-Aug-20 |

Highly reflective and transparent objects (namely challenging object, e.g., glasses and mirrors) are very common in our daily lives, yet their unique visual and optical properties severely degrade the performance of many computer vision algorithms when used in practice.

(a)

(b)

(c)

(d)

Fig 1. Problems with challenging object in existing vision tasks.

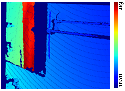

As shown in Fig 1, segmentation algorithm wrongly segments the instances inside the mirror (Fig 1(a)), or segments the instances behind the glass but is not aware that they are actually behind the glass (Fig 1(b)). Many 3D computer vision tasks (e.g., depth estimation and 3D reconstruction) and 3D cameras also suffer from these challenge objects due to their optical properties (Figs 1(c-d)). These challenging objects limit the application of scene-understanding algorithms in the industry. Therefore, it is essential to detect and segment these challenging objects.

Recently, researchers have paid attention to challenging object segmentation and constructed some challenging object datasets. These works only focus on certain types of challenging objects (e.g., mirrors or drinking glasses) rather than general highly reflective and transparent objects.

This proposal provides a unified challenging-object semantic-segmentation dataset, which contains both highly reflective and transparent objects (e.g., mirrors, mirror-like objects, drinking glasses and transparent plastic), which is more general than other above-mentioned datasets of challenging objects. The proposed dataset allows researchers to make profound improvements for challenging object segmentation. It also can promote the progress of related research areas (robot grasping, 3D pose estimation and 3D reconstruction for challenging object).

| Registration Opens | 2021-Mar-31 |

| Data Available | 2021-Mar-31 |

| Submission Deadline | 2021-Jul-30 |

| Release of the evaluation result | 2021-Aug-27 |

| GC Sessions, Winners Announcement | 2021-Sep-20 |